Preface

This website contains labs for CSCI 241. They are very much a work-in-progress. There are definitely errors, typos, misspellings, confusing portions, and probably things that are just plane wrong (like that use of “plane”). If you encounter anything confusing, please don’t hesitate to ask.

All corrections, big or small, are gladly welcome!

At the top of this page, you’ll find three icons. The left shows or hides the table of contents. The middle one lets you pick a theme for this book (go ahead and pick one you like). The right one lets you search the labs.

Once we get to the labs where you’ll be programming in Rust, there are a bunch of code samples. Many of these (but not all of them) are executable.

Here’s an example of runnable code.

fn main() {
    println!("Welcome to CSCI 241!");
}

Mouse over the block of code above and click the Run button to see the output.

The code you write in these labs is designed to run on the lab machines. There is also a virtual machine you may connect to remotely if needed. See the remote coding instructions for details.

Throughout the labs, you’ll find a number of colorful blocks like the example above. Here are a few you’ll encounter in the labs.

Example boxes contain important examples, usually of code that’s similar to the code you will be writing.

Tip or hint boxes contain important information that you’re almost certain to want to use in your implementations.

There are a variety of other boxes, including note, info, and caution. At this point, they have no fixed meaning. As the labs are improved, this may change.

Remote coding

All of the labs can be completed using the lab computers in King 137.

Additionally, there is a virtual machine mcnulty (mcnulty.cs.oberlin.edu)

that you can connect to remotely. The instructions for connecting depend on

whether you are connecting from on campus or off campus.

In a terminal, you can ssh to mcnulty.cs.oberlin.edu. This gives you a

shell on the virtual machine. You will have the same home directory as the lab

machines. Any changes you make in the VM will be reflected in the lab

machines, and vice versa.

You can also use Visual Studio Code to connect to mcnulty. This also uses SSH.

This lets you program remotely using the standard VS Code interface. I find it

pretty convenient. You can also access the shell on the remote machine using

VS Code’s Terminal window as usual.

On campus

From on campus, you can ssh to mcnulty by entering the following command into a

terminal. (The $ indicates the prompt; don’t type it yourself.)

$ ssh username@mcnulty.cs.oberlin.edu

where username is the username you use to log into the lab computers.

Off campus

From off campus, the mcnulty VM is not reachable. You need to tell SSH to “proxy

jump” through occs.cs.oberlin.edu. Fortunately, this isn’t much more

difficult. You just need to pass an option to ssh:

$ ssh -J username@occs.cs.oberlin.edu username@mcnulty.cs.oberlin.edu

Visual Studio Code

To connect to mcnulty using VS Code, open a new window (File > New Window) and in

the welcome screen of the new window, click the Connect to… link. Select the

option Connect to Host… Remote-SSH.

The first time you do this, you’ll have to enter the SSH connection details by

clicking + Add new SSH Host….

Then you need to enter the full ssh command (either the on-campus command or

the off-campus command from above).

VS Code will then ask which ssh_config file to update and give several

options. On macOS and Linux, you want the one in your home directory that’s in

.ssh/config.

Once you’ve done this, click on the Connect to… link and then

Connect to Host… Remote-SSH again. Select the host you just configured.

At this point it will ask you to enter the password you use to log in to the

lab machines. If you are off campus, you’ll have to enter the password twice.

Once for occs, and once for mcnulty.

VS Code will make your .ssh/config file group writeable by default. However, this may

cause an error when you try to ssh into mcnulty. To fix this, open a terminal and run

the following command: chmod g-w ~/.ssh/config.

The documentation for using SSH with VS Code is available here.

The Visual Studio Code extensions you have installed locally, such as

rust-analyzer, may not be installed on the remote machine. If this is the

case, then after connecting to the remote machine, you can install the

extensions as normal (click on the 4 boxes icon, select the extension you

want, and then click Install in SSH: mcnulty).

Lab 0. Getting Started

Welcome to the 0th lab in CS 241! In this short lab, you’re going to

- Log in to a lab machine;

- Install Visual Studio Code extensions;

- Install Visual Studio Code extensions remotely; and

- Debug a short Bash program.

This lab is to be completed individually.

Setup

To get started, first log into the lab machine.

If the lab machine is currently running Windows, you’ll need to restart it. During the boot process, a text screen with different operating systems will pop up for 5 seconds. When this pops up, use the keyboard to select the Ubuntu option (which will probably be the second in the list, just under Windows).

Once you have logged in, open the Visual Studio Code application. To do so,

click the Show Applications button in the bottom left corner of the desktop,

and then open Visual Studio Code.

Move to Part 1 to install the extensions.

Part 1. Installing extensions

In this part, you’re going to install four extensions:

- Remote - SSH: this lets you connect to remote servers using Secure SHell (SSH);

- ShellCheck: this checks your shell scripts for common errors;

- rust-analyzer: this greatly eases the task of programming in Rust; and

- CodeLLDB: this is the debugger we’ll use to debug Rust programs.

Your task

Click on the 4-boxes icon on the left side of the Visual Studio Code window.

This opens the Extensions Marketplace. In the textbox at the top left, enter

the name of the extension you want to install. Click on the matching extension

below the text box and then click the Install button.

After installing an extension, it may ask you to reload Visual Studio Code. Go ahead and do so.

Install all four extensions listed above.

Part 2. Installing remote extensions

In this part, you’re going to connect to a remote server, mcnulty, using

Visual Studio Code and install the ShellCheck, rust-analyzer, and

CodeLLDB extensions.

Your task

Start by reading the Remote coding page.

Follow the instructions on the Remote

coding page for Visual Studio Code to

connect to mcnulty.

At this point, follow the steps in Part 1 to install the three

extensions. This time, rather than an install button, you should see an

Install in SSH button.

In the future, you’ll be able to connect to mcnulty from an installation of

Visual Studio Code on your own machines, even if you’re not in the lab. All of

the files on your lab machine should also be available on mcnulty.

Part 3. Debugging a shell script

In this final part, you’re going to use the ShellCheck extension you just

installed to debug a short Bash program.

Your task

Create a new file in Visual Studio Code by selecting New File… from the

File menu. Name the file hello.sh and save it in your home directory.

Copy the short program below into hello.sh and save the file.

#!/bin/bash

if [[ $# -gt 0 ]]; then
  user="$1"
else
  user="$(whoami)"
fi

echo Hello ${user}, welcome to CS 241

The line that starts with #! is called a

shebang and it tells the

operating system what program to use to run the file, namely /bin/bash.

Next is a somewhat unusual looking if statement that sets the variable

user. If the user runs the script and passes foo as an argument like this

$ bash hello.sh foo

then user will be set to foo. If the user runs the script with no arguments like this

$ bash hello.sh

then user will be set to the name of the current user by calling the whoami program.

The final line of the program prints text. Notice that ${user} is used to

get the value of the user variable. (You can also use $user without the

braces, but there are situations where the braces are required so I tend to

use braces all the time.)
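Here is a small sketch of one situation where the braces matter. The second variable, users, is made up for this illustration and is not part of hello.sh.

```shell
#!/bin/bash
user="Stu"
users="everybody"   # hypothetical variable whose name begins with "user"

# Without braces, Bash reads the longest valid variable name, so this
# expands the variable users, not user followed by the letter s.
without_braces="Hello $users"

# With braces, Bash expands user and then appends the literal letter s.
with_braces="Hello ${user}s"

echo "${without_braces}"
echo "${with_braces}"
```

The first line printed uses the value of users; the second uses the value of user with an s appended.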

Open a terminal in VS Code by pressing Ctrl-` (control-backtick). Run the program a few times with different arguments and pay close attention to how the program behaves.

$ bash hello.sh

$ bash hello.sh Stu Dent

$ bash hello.sh "Stu Dent"

$ bash hello.sh "Stu     Dent"

Notice that $ bash hello.sh Stu Dent didn’t include the Dent portion

whereas when the quotation marks were used, the full name appeared. Bash uses

spaces to split the command into different parts. This is called word splitting.

Stu and Dent became

separate arguments passed to hello.sh. By using quotation marks, the whole

string stays together as a single argument.

This will come up often when working with the terminal.
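One way to watch word splitting happen is to count the arguments a command receives. The sketch below uses a helper function, count_args, which is invented for this illustration and is not part of the lab.

```shell
#!/bin/bash
# count_args is a hypothetical helper: it prints the number of arguments
# it was called with.
count_args() {
  echo "$#"
}

# Without quotation marks, Bash splits on the space: two arguments.
unquoted="$(count_args Stu Dent)"

# With quotation marks, the whole string is a single argument.
quoted="$(count_args "Stu Dent")"

echo "without quotes: ${unquoted} argument(s)"
echo "with quotes: ${quoted} argument(s)"
```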

You may have noticed strange behavior with the final two examples. Namely,

"Stu Dent" and "Stu     Dent" behaved identically:

$ bash hello.sh "Stu     Dent"

Hello Stu Dent, welcome to CS 241

Where did the extra spaces go?

Look in VS Code and you’ll notice that ${user} has a squiggly line under it.

Mouse over the line and you’ll see that the ShellCheck extension has

reported an error here. You can see a list of all problems by opening the

Problems pane by selecting Problems from the View menu.

ShellCheck says, “Double quote to prevent globbing and word splitting.

shellcheck(SC2086).” Actually, the ShellCheck extension merely runs the

shellcheck program. If we run shellcheck ourselves using the terminal as

shown below, it will give us some more detail:

$ shellcheck hello.sh

In hello.sh line 9:

echo Hello ${user}, welcome to CS 241

^-----^ SC2086 (info): Double quote to prevent globbing and word splitting.

Did you mean:

echo Hello "${user}", welcome to CS 241

For more information:

https://www.shellcheck.net/wiki/SC2086 -- Double quote to prevent globbing ...

In addition to showing the error, it also suggests a fix and provides a link for more information.

The underlying problem here is that ${user} expanded to

Stu     Dent

but this underwent word splitting when evaluating the echo line. echo

takes any number of arguments and prints them out in a line with a single

space between them. So when executing the line

echo Hello ${user}, welcome to CS 241

it first expanded ${user} giving

echo Hello Stu     Dent, welcome to CS 241

This is split by spaces and thus executes exactly as if it had been written like this.

echo Hello Stu Dent, welcome to CS 241

Modify hello.sh by following the suggestion given by shellcheck and save

your file. Run the program again. This time, any spaces should be preserved in

the argument.

In general, when programming in Bash, you want to double-quote variable expansions. Otherwise, the expanded values undergo word splitting, and you almost never want that.
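As a minimal sketch of the difference, the snippet below expands the same variable with and without double quotes. The variable name and its value are chosen only for illustration; the unquoted expansion is deliberate here, and ShellCheck would flag it.

```shell
#!/bin/bash
name="Stu    Dent"   # four spaces between the two words

# Unquoted: the value is split into the words Stu and Dent, and echo
# prints its arguments separated by a single space.
unquoted="$(echo Hello ${name})"

# Quoted: the value stays a single argument, so the spaces survive.
quoted="$(echo Hello "${name}")"

echo "${unquoted}"
echo "${quoted}"
```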

See the ShellCheck Wiki for error SC2086 for more explanation as well as a

suggestion for a better way to use quotation marks in the echo line in

hello.sh.

Part 4. Getting Ready For Class

In this course, we will use a number of different online resources. In this part of the lab, we will make sure you are set up and ready to go.

Gradescope

Go to gradescope.com and log in using your oberlin.edu email account. (You may need to create an account.) When you log in, you should see CSCI 241 listed under Your Courses. If you click on the course, you should see some Reading Question assignments. You will need to complete these before class on the date listed on them.

If you do not see CSCI 241 listed as one of your courses, let your instructor know.

Syllabus

Please take a minute to read through the syllabus for this course (linked from Blackboard).

Submitting

In the other labs, you will be using GitHub to submit your assignments.

For Lab 0, call over the professor or lab helper, introduce yourself, and show your completed program.

Once you’ve done this, the professor or lab helper will mark that you have completed the lab.

At this point, you’re all done. Don’t forget to log out of the lab machines!

Lab 1. Introduction to Bash

Due: 2026-02-16 at 23:59

In this lab, you will explore the Bash shell and learn to use some of the standard command line utilities to

- navigate the file system;

- list the contents of a directory;

- create, move/rename, and delete files and directories;

- download files from the internet;

- read manual pages to learn how to use these utilities; and

- upload files to GitHub.

This lab is to be completed individually.

Setup

Log in to one of the lab machines and open a terminal by clicking the Show Applications button in the lower left corner of the desktop and opening

Terminal.

To get started, continue on to Part 1!

Command Reference

For your reference, the following is a list of relevant commands and their descriptions.

- cd: Changes the current working directory

- ls: Lists the contents of a directory

- pwd: Prints the absolute path of the current working directory

- mkdir: Creates a new directory

- cp: Copies files or (with a suitable option) directories

- rm: Removes files or (with a suitable option) directories

- mv: Moves (or renames) files and directories

- rmdir: Removes an empty directory (this is less commonly used than rm for this operation)

- man: Shows a manual page which provides a bunch of information about many of the shell commands and programs

- wget: Downloads files from the Internet

- grep: Searches text files for a given pattern

Part 1. Navigating the file system

The file system is a hierarchical arrangement of files, grouped into

directories. The hierarchy forms a

tree of the sort you

learned about in CSCI 151. Each file and directory has a name and we can refer

to any file in the file system by giving a path through the tree, starting

at the root directory and using / to separate the directories along the

path.

Here are some example paths.

- /: The root directory itself

- /bin/bash: The program bash (this is the shell we’re using right now!) which lives inside the directory bin which lives inside the root directory

- /usr/bin/wget: The program wget (this program will let us download files from the internet) which lives in the directory /usr/bin

Naming files by starting at the root / all the time is time-consuming. Each

program has a notion of its current directory. We can also name files by

giving a path from the current directory.

Here are some examples of relative paths.

- paper.pdf: The file paper.pdf inside the current directory

- Music/swift.mp3: The file swift.mp3 inside the Music directory inside the current directory

The terminal you opened launched the bash shell and it should be currently

running. You should see a prompt in the terminal that looks like

user@mcnulty:~$ (yours will have a different username and hostname—the name of

the computer). From here on out, the prompt will be specified only as $. You

should not type the $ character when entering input.

Bash has several commands for navigating the file system and listing the contents. The most commonly used commands are

- cd changes the current directory; $ cd /usr/bin changes the current directory to /usr/bin; with no arguments, cd changes the current directory to your home directory;

- ls lists the contents of a directory; $ ls /tmp lists the contents of the directory for temporary files, /tmp; with no arguments, ls lists the contents of the current directory; and

- pwd prints the current directory (the name stands for “print working directory” where “working directory” just means the current directory).

By convention, files and directories whose names start with a period are hidden from directory listings. If you want to see all files, including hidden ones, use $ ls -a.

Every directory has two special directory entries: a single period and two periods. The single period, ., is the entry for the directory itself. Two periods, .., is the entry for the parent directory.

Perhaps the most common use of .. is to change to the parent directory by running cd followed by two periods. For example, after changing to a directory like $ cd foo/bar/, I can return to the foo directory by running $ cd ..
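The following sketch walks through the two special entries in a throwaway directory. The directory names foo and bar match the example in the text; the scratch directory created by mktemp is an assumption made for this illustration so that nothing in your home directory is touched.

```shell
#!/bin/bash
# Build foo/bar inside a scratch directory.
scratch="$(mktemp -d)"
mkdir "${scratch}/foo"
mkdir "${scratch}/foo/bar"

cd "${scratch}/foo/bar" || exit 1

# Two periods name the parent directory, so this moves from bar up to foo.
cd .. || exit 1
after_dotdot="$(basename "$(pwd)")"

# A single period names the current directory, so this changes nothing.
cd . || exit 1
after_dot="$(basename "$(pwd)")"

echo "after cd ..: ${after_dotdot}"
echo "after cd .: ${after_dot}"

# Clean up: remove the (now empty) directories from the inside out.
cd / || exit 1
rmdir "${scratch}/foo/bar" "${scratch}/foo" "${scratch}"
```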

Your task

Create a file named task1.txt using a text editor. To do so, click the Show Applications button in the bottom left corner of the desktop, and then open

Text Editor.

You may also use a command-line editor like emacs or vim. Both are available on mcnulty. To open one, open a terminal and type emacs {filename} or vim {filename}.

Using the shell commands cd and ls, find two different files or

directories in each of the following directories

- /

- /usr

- /usr/bin

- /etc

Write the names of the 8 different files (and which directory they’re in) in

task1.txt. Save this file in your home directory for now. We’re going to

move it later.

The shell expands a tilde, ~, into the path for your home directory. Thus, you can, for example, change back to your home directory using $ cd ~. You probably want to do so now.

You may construct paths relative to your home directory by starting the path with ~/. For example, the shell will expand ~/foo/bar into the path to the foo/bar file or directory inside your home directory.

Note that the shell treats an unquoted ~ differently than a quoted tilde, '~' or "~". Run the command $ echo ~ '~' "~" to see the difference.

Use $ ls ~ to print the contents of your home directory. You should see task1.txt

in that directory listing.

Use $ cat ~/task1.txt to print the contents of the file to the terminal.

Return to your home directory by running $ cd (with no arguments) or

equivalently $ cd ~.

Part 2. Manipulating files and directories

In Part 1, you created a file in your home directory using a text editor and

you were able to see that file in the file system using the ls command. Now,

we’re going to do the opposite: We’re going to manipulate files on the command

line and examine the results in the GUI—the graphical user interface.

We’re going to be using some new commands.

- mkdir: Creates a new directory

- cp: Copies files or (with a suitable option) directories

- rm: Removes files or (with a suitable option) directories

- mv: Moves (or renames) files and directories

- rmdir: Removes an empty directory (this is less commonly used than rm for this operation)

- man: Shows a manual page which provides a bunch of information about many of the shell commands and programs. Note that man uses the less pager by default

- wget: Downloads files from the Internet

Your task

Create the directory cs241/lab1 in your home directory using the mkdir

command. (If your current directory is not your home directory, you can run $ cd or equivalently $ cd ~ to change to your home directory.)

If you try the “obvious” command, you’ll likely encounter a confusing error.

$ mkdir cs241/lab1

mkdir: cannot create directory ‘cs241/lab1’: No such file or directory

The problem here isn’t that cs241/lab1 doesn’t exist (which is good because

we’re trying to create this directory), the problem is that the cs241

directory doesn’t exist. It’d be great if the error message reflected which

component of the path didn’t exist, but alas, it does not.

In general, pay close attention to error messages but be aware that when the error message is about a file path, it could be about any component of that path.

To create the cs241/lab1 directory, you can use two invocations of mkdir,

first to create cs241 and second to create lab1 within it.
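The same pattern can be tried safely in a scratch directory. In this sketch, the names outer and inner are placeholders invented for the illustration, and the error message from the failing mkdir is discarded so that only the summary is printed.

```shell
#!/bin/bash
scratch="$(mktemp -d)"
cd "${scratch}" || exit 1

# This fails because the parent directory, outer, does not exist yet.
if mkdir outer/inner 2>/dev/null; then
  first_try="succeeded"
else
  first_try="failed"
fi

# Creating the parent first and then the child works.
mkdir outer
mkdir outer/inner

if [[ -d outer/inner ]]; then
  created="yes"
else
  created="no"
fi

echo "first attempt ${first_try}"
echo "outer/inner exists: ${created}"

# Clean up: remove the empty directories from the inside out.
rmdir outer/inner outer
cd / || exit 1
rmdir "${scratch}"
```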

Move the file task1.txt you created in Part 1 inside the cs241/lab1

directory using mv. The basic syntax for the mv command is

$ mv src dest

Keep in mind that when naming a file with a path, you either need to give it

an absolute path starting with / or you need to give it a path from the

current directory. Using ~ for your home directory can be a big time saver

when the files you want to use are in your home directory (or in a directory

in your home directory).

Open your home directory using the Files application from the left sidebar

in the desktop. The task1.txt file should not be there. Open the

cs241/lab1 directory. This should contain task1.txt and nothing else.

Create a directory named books in the lab1 directory using mkdir (you

should see the new directory appear in Files).

Run $ man wget to pull up the manual page for the wget program which you

can use to download files from the Internet. Press q to exit man when

you’re done. Run $ wget --help to see similar information.

cd into the books directory you created and use wget to download a copy

of Bram Stoker’s Dracula from

https://www.gutenberg.org/cache/epub/345/pg345.txt.

Rename the file from pg345.txt to Stoker, Bram - Dracula.txt. Note that

this name has spaces in it. You’ll need to specify it as 'Stoker, Bram - Dracula.txt' or Stoker,\ Bram\ -\ Dracula.txt.
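Both ways of writing a name with spaces refer to the same file. Here is a small sketch in a scratch directory; the file names draft.txt and Final Report.txt are made up for the illustration.

```shell
#!/bin/bash
scratch="$(mktemp -d)"
cd "${scratch}" || exit 1
touch draft.txt

# Single quotes keep the new name together as one argument to mv.
mv draft.txt 'Final Report.txt'

# Escaping the space with a backslash names the same file.
if [[ -f Final\ Report.txt ]]; then
  renamed="yes"
else
  renamed="no"
fi

echo "renamed: ${renamed}"

# Clean up the scratch directory.
rm 'Final Report.txt'
cd / || exit 1
rmdir "${scratch}"
```

Without the quotes or backslashes, mv would receive Final and Report.txt as two separate arguments.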

Use wget again to download James Joyce’s Dubliners from

https://www.gutenberg.org/files/2814/2814-0.txt. Rename it Joyce, James - Dubliners.txt.

Different versions of ls will print file names with spaces in them differently. Some versions simply print the file names with the spaces. Others print the file names with quotes around them. When you run ls inside the books directory, you may see something like this.

$ ls

'Joyce, James - Dubliners.txt' 'Stoker, Bram - Dracula.txt'

At this point, the files and directories in your cs241 directory should look

like this.

cs241/
└── lab1
    ├── books
    │   ├── Joyce, James - Dubliners.txt
    │   └── Stoker, Bram - Dracula.txt
    └── task1.txt

Part 3. Grepping

The grep command is used for searching text files for a “pattern” and

printing out each line that matches the pattern. For example, $ grep vampire 'Stoker, Bram - Dracula.txt' prints out each line containing the word

vampire (in lower case). Try it out!

Read grep’s man page to figure out how to perform a case-insensitive search

and run the command to print out all lines matching vampire, case

insensitively. Hint: typing /case (and then hitting enter) while viewing a man

page will search for case in the manual. While searching, you can press

n/N to go to the next/previous instance.

Your task

Using a text editor, create the file task3.txt in the lab1 directory.

Use grep to print out a count of the lines matching vampire case

insensitively. (Search the man page again.) Write the command you used and

its output in task3.txt.

Open the man page for grep one final time and figure out how to get grep

to print the line numbers (and the lines themselves) that match Transylvania

and then do that. Write the command you used and its output in task3.txt.

Find and use the command to print out a count of every word in both books. Use

the apropos command to search manual pages for keywords. The -a (or

--and) option to apropos lets you search for commands that involve all of

the key words so $ apropos -a apple sauce will match commands whose

descriptions contain both the words apple and sauce. So use apropos -a with

appropriate keywords to find a command that produces a word count.

Run that command on all .txt files in the books directory using

$ cmd books/*.txt

where cmd is the command you found with apropos.

The * is called a glob and Bash replaces it with all files that match. In

this case, books/*.txt will be replaced with paths to files in the books

directory that end with .txt. Globs are powerful and make working with

multiple files with similar names easy.
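To see what a glob expands to, you can hand it to a command that just prints its arguments. This sketch uses a scratch directory with made-up file names (a.txt, b.txt, and notes.md) rather than the real books directory.

```shell
#!/bin/bash
scratch="$(mktemp -d)"
cd "${scratch}" || exit 1
mkdir books
touch books/a.txt books/b.txt books/notes.md

# Bash replaces books/*.txt with the matching paths before printf runs,
# so printf receives two arguments and prints one per line.
matches="$(printf '%s\n' books/*.txt)"
echo "${matches}"

# Clean up the scratch directory.
rm books/a.txt books/b.txt books/notes.md
rmdir books
cd / || exit 1
rmdir "${scratch}"
```

Note that notes.md is not printed because it does not end with .txt.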

Put the command you ran and its output in task3.txt.

Finally, read the man page for the command to count words in files and find

the option to only print line counts. Use the command on both files. Put the

command and the output in task3.txt.

Submitting (5 points)

In this course, we’re going to be using GitHub. GitHub uses Git which is a version control system used by programmers. We’re going to learn a lot more about Git later, but for now, let’s use its most basic functionality.

Go to GitHub and create an account, if you don’t already have one. Many of the courses in the computer science major will require using GitHub so you’ll be well-served by getting comfortable with it now.

Go to the GitHub Classroom assignment page and accept the assignment. Wait a few seconds and then reload the page and you should see “You’re ready to go!” Click the link for the assignment repository.

The repository it created should be at a URL like

https://github.com/systems-programming/241-lab1-accountname. The last component

of the URL will be some combination of the lab name and your GitHub username.

You will need this in the cloning step below.

Next, you need to clone the assignment repository which will download all of

the files (if any) in the remote repository on GitHub to your computer. To do

this, you’ll need to use gh to authenticate to GitHub and then perform the clone.

gh is the command-line tool for working with GitHub. Most of the time, you

will use plain Git commands, but for authentication and cloning repositories,

you’ll use gh.

In a terminal, run the command

$ gh auth login

and then using the arrow keys, select GitHub.com and press enter. Select

HTTPS for the preferred protocol and press enter. When it asks to

authenticate Git with your GitHub credentials, type Y and press enter. When

it asks how you’d like to authenticate, select Login with a web browser and

press enter. Copy the one-time code it displays and then press enter to open

the browser. If it cannot open the browser, you can click

here.

In either case, paste the code you copied and log in (which should only be

required if you have logged out of GitHub). Click the Authorize github

button on the web page. At this point, you should see something like

✓ Authentication complete.

- gh config set -h github.com git_protocol https

✓ Configured git protocol

✓ Logged in as accountname

in the terminal.

Now you’re ready to clone your repository. (In future labs, you shouldn’t need to authenticate again unless you log out.) Run

$ gh repo clone systems-programming/241-lab1-accountname

where 241-lab1-accountname should match the URL for the repository described above.

At this point, you should have a directory named 241-lab1-accountname (where,

again, the actual name is specific to your repository). This is your

newly-created local repository.

Copy all of the files and directories from lab1 into the newly-created local

repository’s directory. Read the man page for cp to figure out how to

recursively copy directories.

cd to your local repository directory. The contents of this directory

(recursively) should look like this.

repo
├── books
│   ├── Joyce, James - Dubliners.txt
│   └── Stoker, Bram - Dracula.txt
├── task1.txt
└── task3.txt

We want to add the files to the repository, commit them, and then push them to GitHub. (We’ll talk about why there are separate steps later.) You can think of these three operations as “inform Git about the current state of the files,” “have Git make a snapshot of the current state of the repository,” and “tell GitHub about the changes we made in the local repository.”

These are the commands you need to run.

$ git add books task1.txt task3.txt

$ git commit -m 'Submitting lab 1'

$ git push

If you make changes to a file, you need to run $ git add and pass it the path of the file you changed again. And then you’ll need to run $ git commit and finally $ git push.

The -m 'Submitting lab 1' causes Git to create a commit message with the

contents Submitting lab 1. Normally, we’ll want to create more descriptive

messages, but this is fine for now.

At this point, you’re done! You can check that everything worked correctly by going back to your repository on GitHub and checking that all of the files and directories show up. You should see two directories and two files:

- .github

- books

- task1.txt

- task3.txt

The .github directory was added automatically by GitHub Classroom. So why

didn’t it show up in your local repo? Because its name starts with a period!

Recall that files whose names start with a period are hidden. You can see the hidden files

using ls -a. If you do this, you’ll see a .git directory

too. .git is where Git stores the repository’s history and local configuration.

Finally, you can remove your lab1 directory that we started with now that

you’ve copied everything over to your local repository for lab 1. Since lab1

isn’t empty, $ rmdir lab1 won’t work to remove it. And since it’s a

directory, $ rm lab1 won’t work either! Go ahead and try both commands out.

Read the man page for rm to figure out how to remove directories

recursively.

Following the instructions above regarding which files should appear in your repository and which must be removed is worth 5 points. Every subsequent lab also has 5 free points for following the submission directions.

Lab 2. Shell scripting

Due: 2026-02-23 at 23:59

Shell scripts show up all over the place from programs on your computer to building software to running test suites as part of continuous integration. Learning to write (often short) Bash scripts can be a massive productivity boost as you can automate many tedious tasks. For example, if you find yourself needing to run a sequence of shell commands multiple times, a shell script is often the perfect tool. No more forgetting an argument or accidentally running the commands out of order. Write it once, run it many times.

In this lab, you will learn

- to write Bash shell scripts;

- how to run programs multiple times with different arguments; and

- how to use tools to analyze your scripts for common errors.

Preliminaries

First, find a partner. You’re allowed to work by yourself, but I highly recommend working with a partner. If you choose to work with a partner, you and your partner must complete the entire project together. Dividing the project up into pieces and having each partner complete a part of it on their own will be considered a violation of the honor code. Both you and your partner are expected to fully understand all of the code you submit.

Click on the assignment link. One partner should create a new team. The second partner should click the link and choose the appropriate team. (Please don’t choose the wrong team. There’s a maximum of two people per team, so if you join the wrong one, you’ll prevent the correct person from joining.)

Once you have accepted the assignment and created/joined a team, you can clone the repository and begin working. But before you do, read the entire assignment and be sure to check out the expected coding style.

Be sure to ask any questions on Ed.

Coding style

For all of the shell scripts you write, I recommend you follow the Google Shell Style Guide. Look at the section on formatting. These rules are, in many ways, arbitrary. However, it’s good to stick to one style. The guide says to use two spaces to indent. Use whatever you’d like, but be consistent.

Once you open a shell script, the bottom of the window should show something like

Ln 1, Col 1 Spaces: 4 UTF-8 LF Shell Script

You can change the number of spaces by clicking on Spaces: 4, then Indent using spaces and then select 2 (to match the Shell Style Guide).

If you use NeoVim or Vim as your editor, you can include the line (called a modeline)

# vim: set sw=2 sts=2 ts=8 et:

at the bottom of each of your scripts to force Vim to indent by 2 spaces and to ensure that tabs will insert spaces (to match the Shell Style Guide). This requires “modeline” support to be enabled which is disabled by default. You can enable it by adding the line

set modeline

to your ~/.vimrc file, creating it if necessary.

You can also set options in your ~/.vimrc file, creating one if necessary.

For example, on mcnulty, I have the simple ~/.vimrc.

set background=dark

filetype plugin indent on

autocmd FileType sh setlocal shiftwidth=2 softtabstop=2 tabstop=8 expandtab

set modeline

The first line tells Vim to use colors suitable for a terminal with a dark background. The second line tells Vim to use file-type aware indenting. The third line tells Vim to apply the same indentation options as the modeline above to shell script files. The fourth enables modelines. See the Vim wiki for more details.

After you write the #!/bin/bash line at the top of your file (or a modeline

at the bottom), you’ll probably want to reopen the file so that Vim knows it’s

a bash file and turns on the appropriate syntax highlighting and indentation.

If you use emacs, you’re kind of on your own. Feel free to ask on Ed, search StackOverflow, and read the Emacs Wiki.

Same with Nano. This might be useful.

Run time errors and return values

For each of the parts below that ask you to print out a usage message or an

error message, this message should be printed to stderr. You can use code

like this to print a usage message.

echo "Usage: $0 arguments" >&2

Any errors should cause the script to exit with a nonzero value (1 is a pretty

good choice). Scripts that run successfully should exit with value 0. You can

use exit 1 to exit with the value 1.

Script warnings and errors

Make sure your scripts pass shellcheck without errors or warnings. If you

disable a particular warning, you must leave a comment giving a very good

reason why you did so.

Executable scripts

All of your scripts should start with the line

#!/bin/bash

and must be executable. Running $ chmod +x on each of the files before

adding them to Git is sufficient.

Script documentation

Each of your scripts should contain a comment at the top of the script (below

the #!/bin/bash line) that contains usage information plus a description of

what the program does.

Example.

#!/bin/bash

# Usage: shellcheckall [dir]

#

# The dir parameter should be the path to a directory. Shellcheckall will...

To get started, continue on to Part 1!

Part 1. Multiple executions (15 points)

One of the most common tasks when working with computers is running a program multiple times with different inputs. (As just a single example, in the final project for CSCI 210, you first write a program to simulate how a part of the processor operates. You will then run this program with different options on different inputs. This is an error-prone operation. Instead, you could write a simple shell script to run the program all of the different ways you need.)

In this part, you will be writing a shell script to run a program (that is provided to you) multiple times.

Clone the jfrac repository outside

of your assignment repository. Do not commit the jfrac code to your

assignment repository! The jfrac repository holds the source code for a

simple program, written in the Rust programming language, that generates

images of the Julia set

fractal.

Inside the jfrac directory, run the following command.

$ cargo run -- default.png

The cargo tool is used for building (and in this case running) software

written in Rust.

This will produce an image file that is 800 pixels wide and 800 pixels high

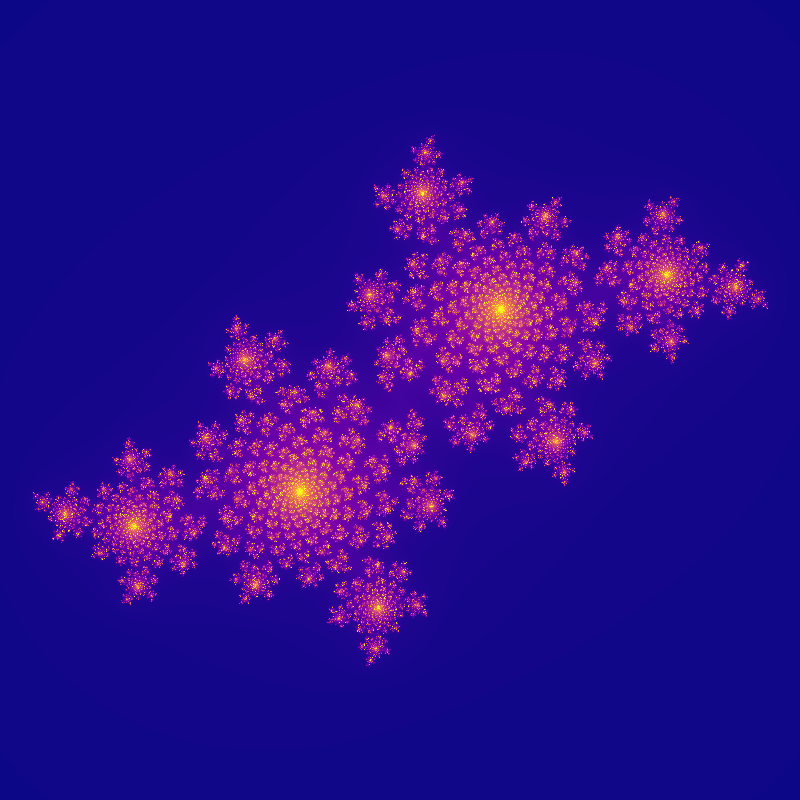

named default.png. It looks like this.

.

.

The mathematics behind the Julia set are explained on the Wikipedia page, but are not important for us here. What is important is that by changing the complex number $c$ in the equation $f(z) = z^2 + c$, we can drastically change the output fractal. The number $c$ is specified by passing the --constant option to the program. For example, the default constant is $-0.4 + 0.6i$, so running

$ cargo run -- --constant="-0.4 + 0.6i" explicit.png

will produce the file explicit.png which is identical to default.png shown

above. The strange -- by itself is required here. Everything before the --

is an option or argument for cargo. Everything after the -- is an option

or argument for the jfrac binary itself.

There’s no man page for jfrac, but it comes with a short help. Run

$ cargo run -- --help

to see the options. This will also show you how to correctly format both short (e.g., -c <value>) and long (e.g., --constant <value>) options.

Your task

First, play around with the --constant option to jfrac to produce images

with different constants. Look at the examples given in the quadratic

polynomials

section of Wikipedia for inspiration. You’ll likely want to select values of $c$ such that and . As you work, remember to keep track of the values of $c$ that you like.

Find 5 values of $c$ that you like.

Your task is to write a Bash script named genfrac which will run the jfrac command

several times (by using cargo run as shown above) using two nested for

loops. You should provide whatever values of $c$ you choose as input arguments to your script.

Your script must use positional parameters to access the values.

Your script must generate each of the 5 fractals at 3 different sizes: 200 × 200, 400 × 400, and 800 × 800. You can assume your script is running inside the jfrac directory. However, once you are done testing and running it, you will need to move it to your lab2 repository. Make sure not to submit the jfrac directory.

Each file should be named Julia set (constant) size.png. For example, using

the default constant and default size, you would produce the file named Julia set (-0.4 + 0.6i) 800x800.png.

You should have 15 generated images in total. Add those images to your

repository in an images directory.

Part 2. Simple pipeline (5 points)

For several subsequent parts of this lab, you’re going to need to run the scripts you write on a large code base. We’re going to use the source code for the Linux kernel. You’re going to write a one-line shell script that downloads and decompresses the source code.

Your task

Write a one-line script that uses curl to download the compressed Linux

source code and pipes it to tar which extracts the source code into the

current directory. The Linux source code is available at this URL.

https://github.com/torvalds/linux/archive/refs/tags/v6.4.tar.gz

Your command should be structured as follows.

$ curl ARGS | tar ARGS

After you run the command, you should have a new directory named linux-6.4

which contains the source code to version 6.4 of Linux. Do not commit the

Linux source code to your assignment repository.

Write the pipeline command you come up with in a file named README.

Normally, a README provides information about a project. GitHub will display

the contents of your README when you visit the web page for your repository

(after you have pushed the README to GitHub). You’ll use this same file

several times to record the commands you come up with.

- If you run curl https://github.com/torvalds/linux/archive/refs/tags/v6.4.tar.gz with no other options, no output is printed. That’s because GitHub, like many websites, will redirect the browser to different URLs. The way this works technically is to use a redirection HTTP status with a Location header. Search the curl man page to figure out which option to use to get curl to follow the redirection specified in the Location header.

- The tar command, like many (but sadly not all) command-line utilities that operate on files, will accept a single - as the name of the file to mean read from stdin rather than reading from the file (and for output, write to stdout rather than writing to a file).

- Notice that the source code is in an archive with the file extension .gz. This means that the code was archived using the gzip software. You should find a tar option that works with gzip archives.

Part 3. Shell script hygiene (25 points)

Shell scripting is a powerful tool that is used in many areas of software

development, including build scripts (that build the software), test scripts (that

test the software), and conformance checking scripts (that check things like

formatting conventions). An example of a conformance checking script might be

one that is run on every git commit to check that the files being changed

have no errors or warnings according to some tool.

You’re going to write a conformance checking script that checks if all of the

shell scripts in a directory pass shellcheck.

To find all of the shell scripts in a directory, you will use the find

command. Conceptually, we want to loop over all of the files that have a

particular extension and do something with them. For example, if we want to

print the paths of every text file in a directory whose path is stored in the

dir variable, we’d like to be able to do

something like this:

for file in $(find "${dir}" -name '*.txt'); do
  echo "${file}"
done

Unfortunately, this doesn’t work! If we run shellcheck on this, we get a warning:

for file in $(find "${dir}" -name '*.txt'); do

^-- SC2044 (warning): For loops over find output are fragile. Use find -exec or a while read loop.

The problem here is that file names can contain spaces (and even newline characters!). The shellcheck wiki page for error SC2044 gives example code to make this work. It would look something like

while IFS= read -r -d '' file; do

echo "${file}"

done < <(find "${dir}" -name '*.txt' -print0)

Note carefully the space after the = and the space between the two <

characters!

This arcane construction first runs

find "${dir}" -name '*.txt' -print0

which is the same as the previous find command except that rather than

printing the matching paths separated by a newline (which, as mentioned, is a

valid character in a file name), it separates the output with a 0 byte. (Not

the character 0 which is a byte with integer value 48, but the byte with

value 0.)

The output of the find command becomes the standard input for the

while IFS= read -r -d '' file; do ... done

loop. The loop is going to read from stdin (from the read command) and

split up the input by 0 bytes (which, conveniently, is what the -print0

argument to find produced).

Each time through the body of the loop, the file variable will be set to the

path of one of the files that find found.

Your task

Write a shell script called shellcheckall which takes zero or one parameters.

The parameter, if given, should be a path to a directory. If no parameters are

given, it should act on the current directory. If two or more parameters are

given, output usage information to stderr, and exit with return value 1. If

the supplied parameter is not a directory, output an error message (on

stderr) and exit with return value 1.

The script should search the given directory (and any directories inside of

it) to find all of the files with the extension .sh. It should run

shellcheck on each file and count how many scripts pass out of the total

number of shell scripts. If any of the scripts fail shellcheck, then when

your script exits, it should exit with return value 1. See the examples below,

including the return values.

Make sure you handle files with spaces in the name. Make sure the script works correctly when run on an empty directory and one that contains no shell scripts (see the examples below).

How many shell scripts in the entire linux-6.4 directory pass shellcheck? Write your

answer in your README.

Write a for-loop that runs shellcheckall on each directory in

linux-6.4. It should print out the name of the directory, a colon, a space,

and then the output from shellcheckall. Your loop should probably start like

this.

for dir in linux-6.4/*/; do

The / after the * means the glob will only match directories. You’ll want

to use echo -n to print text without a trailing newline. See the final

example below.

Put your for-loop and the output in your README.

The following examples echo the return value $? to show that it returns 0 on success and 1 on an error or if a file does not pass shellcheck.

$ ./shellcheckall too many args

Usage: ./shellcheckall [dir]

$ echo $?

1

$ ./shellcheckall linux-6.4/tools/perf

6 of 74 shell scripts passed shellcheck

$ echo $?

1

$ ./shellcheckall empty-directory

0 of 0 shell scripts passed shellcheck

$ echo $?

0

Here’s an example of the for loop output.

$ for dir in linux-6.4/*/; do ...; done

linux-6.4/Documentation/: 1 of 9 shell scripts passed shellcheck

linux-6.4/LICENSES/: 0 of 0 shell scripts passed shellcheck

linux-6.4/arch/: 15 of 39 shell scripts passed shellcheck

...

Part 4. Top file types (20 points)

In this part, you’re going to write a complex pipeline to answer a simple question: What are the top 8 file types in the Linux 6.4 source code? We’re going to use a file’s extension to determine what type of file it is. We’re going to ignore any files that don’t have extensions.

Your task

Write a script topfiletypes to answer this question. Your script should

consist of a single complex pipeline. Put the output of your script in your

README.

The structure of the pipeline will look like this

while IFS= read -r file; do ...; done < <(find linux-6.4 -name '*.*') | ... | ... | ... | ...

Notice how the output from the while loop is passed as standard input to the

next command in the pipeline.

Your output should look like this.

$ ./topfiletypes

32463 c

23745 h

3488 yaml

...

1. The while loop given here is slightly different from the one you used before (no -d '' or -print0 options). Most filenames don’t actually have newlines in them, so we can use this technique to read one line at a time from the output of the find command rather than reading up to a 0 byte.

2. As discussed in lecture, Bash supports a lot of convenient variable expansions. You can use one of these inside your while loop to print the extension of the file.

3. Once you print out the extension, you will want to sort the output alphabetically. You can run apropos -a <search term> to find commands that sort lines.

4. Then, on the output from the command in 3., you can use a command to omit repeated lines. You can run apropos -a <search term> again to find this command.

5. You should also find an option for that command (look at its man page) to print out the number of occurrences of each line.

6. You can use the same command you found in 3. a second time to sort your output from 5. However, this time, you should sort numerically (instead of alphabetically), and in reverse, so the biggest number appears first. Look at the man pages to find the appropriate options.

7. You should then find a command to output the first part of the input, and apply that to your output from 6. You can use this to output the 8 file extensions that appear the most. Find an option that allows you to specify the number of lines the command outputs.

8. In a loop, you will want to print out the file extension and combine all of the commands described above in a pipeline to produce the final result.

9. You can write this all as a single line. Don’t do that. Use \ at the end of each line to continue on the next line.

   while IFS= read -r file; do
     echo ...
   done < <(find linux-6.4 -name '*.*') \
     | ... \
     | ... \
     | ... \
     | ...

Submitting (5 points)

To submit your lab, you must commit and push to GitHub before the deadline.

Make sure you followed all of the instructions about stderr and return values, script warnings and errors, executable scripts, and script documentation.

Your repository should contain the following files and directory.

- README
- genfrac
- shellcheckall
- topfiletypes
- images/

Your repository should not contain either the jfrac or the Linux code.

Any additional files you have added to your repository should be removed from

the main branch. (You’re free to make other branches, if you desire, but

make sure main contains the version of the code you want graded.)

The README should contain

- The names of both partners (or just your name if you worked alone…but please don’t work alone if you can manage it).

- Your answers to questions for each part and the commands you used to find them.

Lab 3. Introduction to Rust

Due: 2026-03-02 at 23:59

Preliminaries

First, find a partner. You’re allowed to work by yourself, but I highly recommend working with a partner. If you choose to work with a partner, you and your partner must complete the entire project together, as a single repository. Dividing the project up into sections and having each partner complete a part of it on their own will be considered a violation of the honor code. Both you and your partner are expected to fully understand all of the code you submit.

Click on the assignment link. One partner should create a new team. The team name cannot be one you’ve used for a previous lab. The second partner should click the link and choose the appropriate team. (Please don’t choose the wrong team. There’s a maximum of two people per team, so if you join the wrong one, you’ll prevent the correct person from joining.)

Once you have accepted the assignment and created/joined a team, you can clone the repository and begin working.

Be sure to ask any questions on Ed.

Compiler warnings and errors

Make sure your code compiles and passes clippy without errors or warnings.

That is, running

$ cargo clean

$ cargo build

$ cargo clippy

should build your program without errors or warnings. Running these commands

will only work from inside a Rust project directory (that is, after you cd into it).

Cargo’s clippy is a command-line utility for linting Rust code. A linter automatically analyzes source code for errors, vulnerabilities, and stylistic issues to improve code quality. Cargo clippy will provide suggestions and warnings for potential errors in your code, such as unused variables, unnecessary operations, and more. For example, it will suggest more efficient ways of writing your code and point out common pitfalls that could lead to runtime errors.

VS Code doesn’t always update its error underlining until after you save your file. So make sure to do that with some regularity while you’re writing code.

Formatting

Your code must be formatted by running $ cargo fmt. This will automatically format your code in a consistent Rust style.

Using Mcnulty

If you are working on the lab machines, you should ssh into mcnulty and run your Rust code there. You may be thinking, “what’s the point of using mcnulty?” If you are, great question! We’ve set up mcnulty so that it already has Rust and its various dependencies correctly installed. This means that all you have to do is log into mcnulty and you can start running your Rust programs. We’ve created a separate VM instead of installing Rust directly on the lab machines for a variety of reasons: the VM is isolated from the CS servers, meaning we can make changes to the software and setup without affecting everyone else using the servers. This gives us a greater level of flexibility in the software we use, and it makes maintenance more convenient.

You’re welcome to install Rust locally; in this case, you should follow the instructions in 1.1 of the Rust textbook.

To get started on this lab, continue on to Part 1!

Part 1. Guessing game (15 points)

The first application you will write is a number guessing game.

The tutorial you’re going to be following for this part of the lab contains a

discussion of the integer types like i32, i64, and u32. It talks about

how many bits each of them is, 32 or 64, respectively. You’ll learn a lot

more about this in CSCI 210. To relate this to Java, an i32 is Java’s int

type. An i64 is Java’s long type. The u32 and u64 types don’t have

analogues in Java. These are the unsigned types meaning that they can only

hold non-negative values. This is perhaps best demonstrated with an example.

We can print out the maximum and minimum values these types can hold as

follows.

fn main() {
    println!("An i32 holds values from {} to {}", i32::MIN, i32::MAX);
    println!("An i64 holds values from {} to {}", i64::MIN, i64::MAX);
    println!("A u32 holds values from {} to {}", u32::MIN, u32::MAX);
    println!("A u64 holds values from {} to {}", u64::MIN, u64::MAX);
}

Click the Run button to see the results. There are other integer types: i8, i16, u8, u16, isize, and usize. You can get their minimum or maximum values the same way.
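To make the signed/unsigned distinction concrete, here is a small sketch (it is not part of the tutorial). The helper name fits_in_u32 is made up for this illustration; u32::try_from is the standard library conversion, and it fails when a value is outside the range a u32 can hold.

```rust
use std::convert::TryFrom;

/// Hypothetical helper: returns true if `value` can be stored in a `u32`.
fn fits_in_u32(value: i64) -> bool {
    // `try_from` returns an `Err` when the value is outside 0..=u32::MAX.
    u32::try_from(value).is_ok()
}

fn main() {
    // Try values just outside and just inside the range of a `u32`.
    for value in [-1_i64, 0, i64::from(u32::MAX), i64::from(u32::MAX) + 1] {
        println!("{value} fits in a u32: {}", fits_in_u32(value));
    }
}
```

Negative values and values larger than u32::MAX are rejected, which is exactly what “unsigned, 32 bits” means.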

Your task

Follow the instructions given in Chapter 2 of the book with the following adjustments:

- In the section “Setting Up a New Project,” it tells you to go to a projects directory. Instead, cd into the assignment repository you cloned. You’ll create guessing_game in there when you run cargo new guessing_game.

  It’s important that you do this from inside the assignment repository. Don’t run cargo new guessing_game and then later mv the directory into the assignment repo.

  After you run cargo new guessing_game, open up Visual Studio Code by running

  $ code guessing_game

  This will open Visual Studio Code which will ask you some questions:

  - Do you trust the authors of the code? Yes.

  - It detects that the containing directory is the root of a Git repository and it will ask if you want to open it. Say yes and then select the path to the assignment directory from the list displayed (there will only be one entry). Now, you can use Git directly from VS Code’s user interface.

- When it tells you to run cargo run, you can do that from your open terminal window (after $ cd guessing_game), or you can use VS Code’s built-in terminal to run it.

  Let’s configure VS Code to be able to build, run, and debug our code. Click on the Run and Debug button (shaped like a triangle with a bug on it) on the left side of the window. Click the “create a launch.json file” text. It will ask you for a debugger; select LLDB. This asks if you want to generate launch configurations for its targets. Say yes.

  You can close the launch.json file it created and opened.

  At this point, you can run and debug your application from VS Code.

LLDB is a powerful debugging tool. It allows you to do things like step through code line-by-line (extremely helpful if you’re trying to isolate a specific spot where your program isn’t behaving as expected) and inspect variable values at each step.

To use the debugger in VSCode, you can add a breakpoint in your code. This tells the debugger to stop execution once it gets to the breakpoint, and that will allow you to step through each line, one-by-one, and see what happens when each line executes. To add a breakpoint, move your cursor to the left of the line numbers in VSCode. You should see a red dot; if you click the red dot, this will add a breakpoint. (You can remove the breakpoint by clicking the red dot again.)

When you’re ready to run the program with debugging, you’ll click the Run and Debug button. Once you’re in the Run and Debug menu, you can hit F5 or the green play button on the top left. Your code will then run until the breakpoint, and then it will pause. You’ll see the control menu at the top (there’ll be a triangle play button, buttons with arrows, and a red stop button).

The button to the very left (the triangle) is a Continue button; this will cause your code to execute until it’s finished. To the right is the Step Over button. Hit this if you want to execute the current line of code and pause before executing more. The next two buttons are the Step Into and Step Out buttons. If the current line of code executes a function, Step Into will step into the function, execute the first line of it, and pause. Step Out will finish executing the function and then pause.

At any point during debugging, VSCode will display all of the variables and their values on the lefthand side of the screen.

If you’re done debugging, you can let the program continue to execute, or you can hit the Stop button (the red square).

Once you have finished writing the guessing_game, you should make sure

you’ve added your files to Git and committed them. You can do so either from

the command line, or from within VS Code. Here are the

docs for how to

do it from within VS Code.

Part 2. Iteration (5 points)

Iteration with iterators is a key concept in Rust. Iteration is similar to Java but has some quirks which can be quite surprising.

For the rest of this lab, you’ll explore iterating over elements in a vector and iterating over characters in a string.

Your task

In a shell, cd to your assignment repository and create a new project using

cargo and then open it in VS Code.

$ cargo new iteration

$ code iteration

Change the main function to

fn main() {
    let data = vec![2, 3, 5, 7, 11, 13];

    for x in data {
        println!("{x}");
    }
}

Hover your mouse over the code above and some buttons will appear in the top right of the text box. Click the Run button to run this code.

The vec! macro creates a new Vec containing the elements in the

brackets. (Click the link in the previous sentence to go to the documentation

for Vec.) Vec is the standard vector class in Rust: it is a growable array

of elements, similar to a list in Python and an ArrayList in Java. The

vec! macro here gives a result similar to this (but more efficient and

easier to read).

let mut data = Vec::new(); // Create a new Vec

data.push(2); // Add 2 to the end of the Vec.

data.push(3); // Add 3 to the end of the Vec.

// ...

data.push(13); // Add 13 to the end of the Vec.

Predict what the output of the code will be and then run it. If you predicted incorrectly, try to figure out why the code does what it does before moving on.

Now, duplicate the for loop

fn main() {
    let data = vec![2, 3, 5, 7, 11, 13];

    for x in data {
        println!("{x}");
    }

    for x in data {
        println!("{x}");
    }
}

Again, predict what the output of the code will be and then run it. (You can click the Run button on the above text box to see the error message.)

I suspect the results are quite surprising. You probably got an error that looks like this.

error[E0382]: use of moved value: `data`

--> src/main.rs:8:14

|

2 | let data = vec![2, 3, 5, 7, 11, 13];

| ---- move occurs because `data` has type `Vec<i32>`, which does not implement the `Copy` trait

3 |

4 | for x in data {

| ---- `data` moved due to this implicit call to `.into_iter()`

...

8 | for x in data {

| ^^^^ value used here after move

|

note: `into_iter` takes ownership of the receiver `self`, which moves `data`

--> /Users/steve/.rustup/toolchains/stable-aarch64-apple-darwin/lib/rustlib/src/rust/library/core/src/iter/traits/collect.rs:262:18

|

262 | fn into_iter(self) -> Self::IntoIter;

| ^^^^

help: consider iterating over a slice of the `Vec<i32>`'s content to avoid moving into the `for` loop

|

4 | for x in &data {

| +

For more information about this error, try `rustc --explain E0382`.

error: could not compile `iteration` (bin "iteration") due to previous error

This is a lot of information and it’s quite difficult to understand at first. Reading error messages takes practice. If you look carefully, you’ll notice that the error message is divided into three parts, the error, a note, and a help.

The error tells us that we have tried to use a moved value, namely data on

line 8, column 14 of the file src/main.rs. Next, it shows that on line 2 the

variable data has type std::vec::Vec<i32> which does not implement Copy.

Then, on line 4, data was moved due to an implicit call to .into_iter() and

finally, on line 8, we tried to use data again.

We’ll talk about what this all means but basically, the first for loop used

up or consumed our Vec and thus the Vec no longer exists. This is not how we normally expect for loops to work. Nevertheless, this is how Rust works.

You can think of this as similar to how shift works in Bash. Recall that shift is used for positional parameters; when we call it, it removes the first positional parameter entirely, and shifts the rest of the parameters up by 1. If we use shift to loop through every positional parameter, by the end of the loop, those parameters will be gone, and we cannot access them again. This is what’s happening in our Rust code: by the end of our loop, every item in our Vec is gone, so we can’t access them again in a subsequent loop.

The note portion of the error message tells us why it was used up and shows us code from the standard library to explain why. Let’s skip this part for now.

Finally, the help portion offers a way to solve this problem. If we use for x in &data { } (note the addition of the ampersand &), this will avoid

consuming data. Note that it suggests making this change only on the first

for loop (which for me happens to be on line 4). By using &data rather

than data, we’re instructing the loop to iterate over a reference to the

Vec, rather than the Vec itself.

References do not own data; they simply point to data owned by another variable. A reference is simply the address in memory where we can find the data. The reference tells the for loop that it’s not allowed to consume the data, because the reference doesn’t own the data.

The upshot to using a reference here is that we can now iterate

multiple times.

Go ahead and make that change and rerun cargo run. At this point, your code

should print out the list twice.
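The same idea applies when passing a Vec to a function. The following is a minimal sketch, assuming a made-up helper named total: because it takes a reference (&[i32]) rather than the Vec itself, the caller keeps ownership and can use data as many times as it likes.

```rust
/// Hypothetical helper: adds up the elements without taking ownership.
fn total(data: &[i32]) -> i32 {
    let mut sum = 0;
    for x in data {
        // `x` has type `&i32`, so dereference it to get the integer.
        sum += *x;
    }
    sum
}

fn main() {
    let data = vec![2, 3, 5, 7, 11, 13];

    // Borrowing with `&data` leaves `data` usable afterwards.
    let first = total(&data);
    let second = total(&data);
    println!("first = {first}, second = {second}");

    // `data` is still owned by `main`, so this loop compiles too.
    for x in &data {
        println!("{x}");
    }
}
```

If total instead took a Vec<i32> by value, the second call would fail with the same “use of moved value” error shown earlier.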

Part 3. Reversing a vector (15 points)

In the rest of the lab, you’re going to write some short functions dealing with vectors and strings and you’re going to write some unit tests for the functions.

Continue working in the main.rs file of your iteration project.

Your task

Implement the function reversed_vec.

fn reversed_vec(input_data: &[i32]) -> Vec<i32> {
    let mut result: Vec<i32> = Vec::new();

    todo!("Finish this function")
}

Two things to notice:

- The input argument input_data is of type &[i32]. We’ll talk more about this type later, but for now, think of this as a reference to a Vec. We saw this above when we wrote for x in &data {} where data was a Vec so &data essentially gives us a &[i32].

- The result variable is declared mutable (with the mut keyword) so we can modify it by inserting elements using the push() function.

Before you remove the todo!() and implement this function, let’s write a

unit test similar to the unit tests you wrote in CSCI 151.

At the bottom of main.rs, add the following code.

#[cfg(test)]
mod test {
    use super::*;

    #[test]
    fn reversed_vec_empty() {
        let data = vec![];
        assert_eq!(reversed_vec(&data), vec![]);
    }
}

There’s a lot to unpack here. The first line, #[cfg(test)], tells the

compiler that the item that follows it should only be compiled when we’re

compiling tests. And that item is a module named test. (The name is

arbitrary but is traditionally called test.) We’ll talk more about modules

later.

The use super::*; line makes all of the functions in main.rs outside the

test module available for use inside the test module.

Finally, each unit test we write is just a Rust function that is annotated

with #[test]. If you omit this annotation, the function will not be treated

as a test.
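To see the pieces together on something other than reversed_vec, here is a sketch of a complete file with a function, a main, and a test module. The function doubled and the module name doubled_tests are invented for this example (recall that the module name is arbitrary).

```rust
/// Hypothetical function under test: doubles every element.
fn doubled(data: &[i32]) -> Vec<i32> {
    let mut result = Vec::new();
    for x in data {
        result.push(*x * 2);
    }
    result
}

fn main() {
    println!("{:?}", doubled(&[1, 2, 3]));
}

// Only compiled when running `cargo test`.
#[cfg(test)]
mod doubled_tests {
    // Bring `doubled` (and everything else in the parent) into scope.
    use super::*;

    #[test]
    fn doubled_three() {
        let data = vec![1, 2, 3];
        assert_eq!(doubled(&data), vec![2, 4, 6]);
    }

    // No `#[test]` annotation, so `cargo test` does not run this function.
    #[allow(dead_code)]
    fn not_a_test() {
        panic!("this is never run as a test");
    }
}
```

Running $ cargo test on a file like this should report a single test, doubled_three; the unannotated function is ignored.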

Run the command $ cargo test. You should see some warnings followed by a

test failure (remember, we didn’t implement reversed_vec() so it makes sense

our test should fail).

running 1 test

test test::reversed_vec_empty ... FAILED

failures:

---- test::reversed_vec_empty stdout ----

thread 'test::reversed_vec_empty' panicked at 'not yet implemented: Finish this function', src/main.rs:19:5

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

failures:

test::reversed_vec_empty

test result: FAILED. 0 passed; 1 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Notice that the output tells us our code panicked (i.e., failed) on line 19 of

main.rs.

Before implementing reversed_vec(), write two more tests

#[test]
fn reversed_vec_one() {
    todo!();
}

#[test]
fn reversed_vec_three() {
    todo!();
}

that test the result of reversing a vector with 1 element and a vector with 3 elements.

$ cargo test should now show three failing tests.

Implement the rest of reversed_vec().

- You can create an iterator over input_data using input_data.iter(). Some iterators let you create reverse iterators using the rev() function. In other words, given one iterator it, you can create a new iterator via it.rev() that will iterate in reverse order. So for x in input_data.iter().rev() { } will iterate over input_data in reverse order.

- The iterator returned by input_data.iter() will not make copies of the elements in input_data. Instead, the value returned by the iterator will be a reference to the elements. That is, in

    for x in input_data.iter() { }

  the type of x is &i32 and not i32. Since our result vector holds i32 and not &i32, we need to dereference the reference x to get the underlying integer. We use the * operator to do this. For example,

    for x in input_data.iter() {
        // result.push(x); // Fails because x has type &i32
        result.push(*x); // Succeeds because *x has type i32
    }

  shows the incorrect and correct ways to insert the element into result.
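The two hints above can be combined in a small sketch. The doubled_backwards function below is a hypothetical example (it is not one of the lab's functions); it only illustrates iterating in reverse and dereferencing each element.

```rust
// Hypothetical helper: returns the elements of `input_data` doubled, last element first.
fn doubled_backwards(input_data: &[i32]) -> Vec<i32> {
    let mut result = Vec::new();
    for x in input_data.iter().rev() {
        // x has type &i32, so dereference it to get an i32 we can push.
        result.push(*x * 2);
    }
    result
}

fn main() {
    let data = vec![1, 2, 3];
    println!("{:?}", doubled_backwards(&data)); // prints [6, 4, 2]
}
```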

You’re probably getting a warning at this point about reversed_vec not being

used. Add the line

#![allow(dead_code)]

to the very top of main.rs and it’ll stop warning about the functions that

we’re only using in tests.

Part 4. Checking for in-order data (15 points)

In this part, you’ll implement a new function and write a few more unit tests to make sure your code works.

Your task

Write a function

fn is_in_order(data: &[i32]) -> bool {
    todo!("Implement me")
}

that returns true if the elements of data are in ascending order and false

otherwise. To do this, you’ll want to iterate over data using for x in data {} and check that each element is at least as large as the previous element.

To keep track of the previous element, you’ll need a mutable variable: let mut prev: i32;. You can either use i32::MIN as its initial value or the

value of the first element in data. Either approach works.

Before implementing the function, write the following unit tests, which should pass once you implement the function.

- Empty vectors are always in order.

- Vectors containing a single element are always in order.

- Vectors with multiple elements, in order.

- Vectors with multiple elements, not in order.

Inside your test module, you’ll want to add a new function per unit test. For example, the test for multiple elements not in order might look something like this.

#[test]
fn is_in_order_multiple_out_of_order() {
    let test_vector = vec![10, -3, 8, 2, 25];
    assert!(!is_in_order(&test_vector));
}

Notice that this is asserting the negation of is_in_order() because of the !.

Implement is_in_order and make sure it passes your tests.

My output for $ cargo test looks like this.

running 7 tests

test test::is_in_order_empty ... ok

test test::is_in_order_multiple_out_of_order ... ok

test test::reversed_vec_empty ... ok

test test::is_in_order_multiple_in_order ... ok

test test::is_in_order_one ... ok

test test::reversed_vec_one ... ok

test test::reversed_vec_three ... ok

test result: ok. 7 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Part 5. Iterating manually (10 points)

Similar to the previous part, you will implement a function and some unit tests.

Your task

Write a function that iterates over the elements in its argument and adds them all and returns the sum.

fn manual_sum(data: &[i32]) -> i32 {
    let mut data_iter = data.iter();
    todo!("Implement me")
}

The catch is that for this task, you may not use a for loop. Instead, you’ll

need to work with the iterator returned by data.iter() directly. Iterators

in Rust work similarly to iterators in Java. To implement this as a Java

method using iterators rather than a for loop we might use something like

this.

int manualSum(ArrayList<Integer> data) {
    int sum = 0;
    Iterator<Integer> iter = data.iterator();
    while (iter.hasNext()) {
        sum += iter.next();
    }
    return sum;
}

Iterators in Rust don’t have separate has_next() and next() methods.

Instead, there’s just a single next() method that returns an

Option<T>. Every Option is either None or Some(blah) for some

value blah. Here’s a simple example of using an Option.

fn main() {
    let x: Option<i32> = Some(10);
    assert!(x.is_some());
    assert!(!x.is_none());
    assert_eq!(x.unwrap(), 10);

    let y: Option<i32> = None;
    assert!(y.is_none());
    assert!(!y.is_some());
}

We can use is_some() or is_none() to determine if an Option is a

Some or a None. If we have a Some, then we can unwrap it by

calling unwrap() which returns the data. If we try calling unwrap() on

None, we’ll get a runtime error.
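As a rough illustration of how next() and Option fit together, the sketch below calls next() by hand on a two-element vector. The data and variable names are made up for the example.

```rust
fn main() {
    let data = vec![10, 20];
    let mut data_iter = data.iter();

    // Each call to next() hands back the next element wrapped in Some.
    let first = data_iter.next();
    assert!(first.is_some());
    // Because iter() yields references, unwrap() gives a &i32 here.
    assert_eq!(*first.unwrap(), 10);

    assert_eq!(data_iter.next(), Some(&20));

    // Once the elements run out, next() returns None.
    assert_eq!(data_iter.next(), None);
    assert!(data_iter.next().is_none());
}
```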

Write some unit tests. Make sure you cover all of the following cases.

- Empty data (where the sum should be 0);

- Single-element vectors; and

- Vectors with multiple elements.

Implement manual_sum by calling next() on data_iter repeatedly until the

result is None. Unwrap all of the other values and sum them up.

You’ll probably want a loop like this.

loop {
    // XXX: Get the next element from the iterator
    if /* the element is None */ {
        return sum;
    }
    // XXX: Unwrap and add the value to the sum.
}

Part 6. Iterating over string (10 points)

Strings in Rust are similar to vectors of characters except that, unfortunately, they’re slightly more difficult to use because text is inherently more complicated than vectors.

The most obvious difficulty is you cannot access individual characters in the same way you’d access individual elements of a vector.

fn main() {
    let x = vec![1, 2, 3];
    assert_eq!(x[0], 1);

    let y = "ABC";
    assert_eq!(y[0], 'A'); // FAILS!
}

That last line fails (click the Run button to see the error) because you cannot index into a string like this. The problem is that Rust stores all strings in the UTF-8 encoding. This is a variable-length encoding where different characters may take a different number of bytes to encode. So if you want to get the 10th character from a string, for example, you cannot easily figure out where in the encoded data for the string the 10th character starts without starting at the beginning of the string.

This means that to work with characters, we’re going to need to use an

iterator over the characters. Fortunately, the chars() function returns an

iterator that returns each character.

fn main() {
    let example = "Here is my string";
    for ch in example.chars() {
        println!("{ch}");
    }
}
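To see why the variable-length encoding matters, the sketch below compares byte counts with character counts. The nth_char helper is a hypothetical name introduced only for this example; it gets at a character by walking the chars() iterator from the start.

```rust
// Hypothetical helper: returns the character at position `n`, if there is one.
fn nth_char(s: &str, n: usize) -> Option<char> {
    // chars() decodes the UTF-8 data from the start, so this walks past the first n characters.
    s.chars().nth(n)
}

fn main() {
    let word = "Straße";

    // len() counts bytes in the UTF-8 encoding, not characters.
    assert_eq!(word.len(), 7);
    assert_eq!(word.chars().count(), 6);

    // 'ß' takes 2 bytes and the clown emoji takes 4.
    assert_eq!('ß'.len_utf8(), 2);
    assert_eq!('🤡'.len_utf8(), 4);

    assert_eq!(nth_char(word, 4), Some('ß'));
    assert_eq!(nth_char(word, 10), None);
}
```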

Just as we’ve made a distinction between Vec<i32> and &[i32] where the

latter is a reference to the former, in Rust we have a String data

type and a reference to a string &str. When we have a function that takes a

reference to a string, we use an argument of type &str. Note that strings we

create by enclosing text in quotation marks have type &str and not

String. If we have a String and we want to pass it to a function that

takes a &str, we get a reference to the string using & just as we did with

vectors and references to vectors.
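Here is a small sketch of passing both kinds of string to the same function. The count_exclamations function is hypothetical and exists only to show the argument types.

```rust
// Hypothetical function that only needs to read the text, so it takes a &str.
fn count_exclamations(s: &str) -> usize {
    let mut count = 0;
    for ch in s.chars() {
        if ch == '!' {
            count += 1;
        }
    }
    count
}

fn main() {
    // A string literal already has type &str.
    let literal: &str = "Hi! Hello!";
    assert_eq!(count_exclamations(literal), 2);

    // An owned String; borrow it with & to pass it where a &str is expected.
    let owned: String = String::from("Wow!");
    assert_eq!(count_exclamations(&owned), 1);
}
```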

Your task

Write a function

fn clown_case(s: &str) -> String {
    todo!("Implement me")
}

that takes a &str as input and returns a new String that alternates

capitalization and includes a clown emoji, 🤡, at the beginning and end of the

string as long as s is not the empty string. If s is the empty string,

return just a single clown emoji.

Here are some examples. I recommend you turn them into unit tests before you

implement clown_case.

- "" -> "🤡"

- "I'm just asking questions" -> "🤡i'M jUsT aSkInG qUeStIoNs🤡"

- "Μην είσαι κλόουν στα ελληνικά!" -> "🤡μΗν ΕίΣαΙ κΛόΟυΝ σΤα ΕλΛηΝιΚά!🤡"

- If ch is a char, you can use ch.is_alphabetic() to decide if you want to lowercase or uppercase the letter or just include it in the result unchanged. I.e., only uppercase/lowercase the characters for which ch.is_alphabetic() returns true.

- If ch is a char, then ch.to_lowercase() and ch.to_uppercase() return iterators over chars rather than a char itself. Why? Well, in some languages converting the case changes the number of characters. E.g., a capital ß is SS. You can add the elements of an iterator to a string using the extend() function.

    let mut result = String::new();
    result.extend('ß'.to_uppercase());
    assert_eq!(result, "SS");

- You can append a char to a String by using push(). You can append a &str to a String by using push_str().
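The three ways of appending to a String mentioned in these hints can be seen side by side in the sketch below. The shout function is a hypothetical example and deliberately does something different from clown_case.

```rust
// Hypothetical helper: wraps `s` in square brackets and uppercases every character.
fn shout(s: &str) -> String {
    let mut result = String::new();
    result.push('['); // push() appends a single char
    for ch in s.chars() {
        // to_uppercase() returns an iterator of chars, so use extend().
        result.extend(ch.to_uppercase());
    }
    result.push_str("]!"); // push_str() appends a &str
    result
}

fn main() {
    assert_eq!(shout("straße"), "[STRASSE]!");
    println!("{}", shout("hello")); // prints [HELLO]!
}
```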

At this point you’re done and can submit your code.

However, there is one more optional step you can take if you’d like: Comment

out the existing code in your main() function. (In VS Code, you can comment

or uncomment multiple lines at once by selecting all of the lines and hitting

Ctrl-/.) Write some code in main() to read a line of

input from stdin, pass it to clown_case(), and then print the results. Now

you can run the program and have it turn any input you enter into clown case.

(I have been reliably informed that this is a lot of fun to use.)
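One possible way to read a line of input is sketched below. The read_trimmed_line helper is a hypothetical name, and it is written against any BufRead reader (an assumption made so the helper is easy to test); calling read_line() directly on std::io::stdin() would work just as well.

```rust
use std::io::{self, BufRead};

// Hypothetical helper: reads one line from `reader` and strips trailing
// whitespace, including the newline.
fn read_trimmed_line<R: BufRead>(mut reader: R) -> io::Result<String> {
    let mut line = String::new();
    reader.read_line(&mut line)?;
    Ok(line.trim_end().to_string())
}

fn main() -> io::Result<()> {
    let line = read_trimmed_line(io::stdin().lock())?;
    // In the lab, this is where the line would be passed to clown_case().
    println!("You typed: {line}");
    Ok(())
}
```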

Submitting (5 points)

To submit your lab, you must commit and push to GitHub before the deadline.

Your repository should contain the following files and directory.

fa23-lab3-xxx-yyy
├── README
├── guessing_game
│   ├── Cargo.lock
│   ├── Cargo.toml
│   └── src
│       └── main.rs
└── iteration
    ├── Cargo.lock
    ├── Cargo.toml
    └── src
        └── main.rs

Any additional files you have added to your repository should be removed from

the main branch. (You’re free to make other branches, if you desire, but

make sure main contains the version of the code you want graded.)

The README should contain the names of both partners (or just your name if

you worked alone…but please don’t work alone if you can manage it).

Lab 4: Wordle ahead

Due: 2026-03-09 at 23:59

In this lab, you’ll learn how to

- work with individual characters in a string;

- write colored text to the terminal;

- flush output to standard out;

- call functions defined in other modules;

- implement a command-line utility;

- parse command-line arguments;

- read from standard in or from a file; and

- handle errors.

Preliminaries

First, find a partner. You’re allowed to work by yourself, but I highly recommend working with a partner. Click on the assignment link. One partner should create a new team. The team name cannot be the same as one used for a previous lab. The second partner should click the link and choose the appropriate team. (Please don’t choose the wrong team: there’s a maximum of two people, and if you join the wrong one, you’ll prevent the correct person from joining.)

Once you have accepted the assignment and created/joined a team, you can clone the repository and begin working.

Be sure to ask any questions on Ed.

Compiler warnings and errors

Make sure your code compiles and passes clippy without errors or warnings.

That is, running

$ cargo clean

$ cargo build

$ cargo clippy

should build your program without errors or warnings.

Formatting

Your code must be formatted by running cargo fmt.

Part 1. Wordle (35 points)

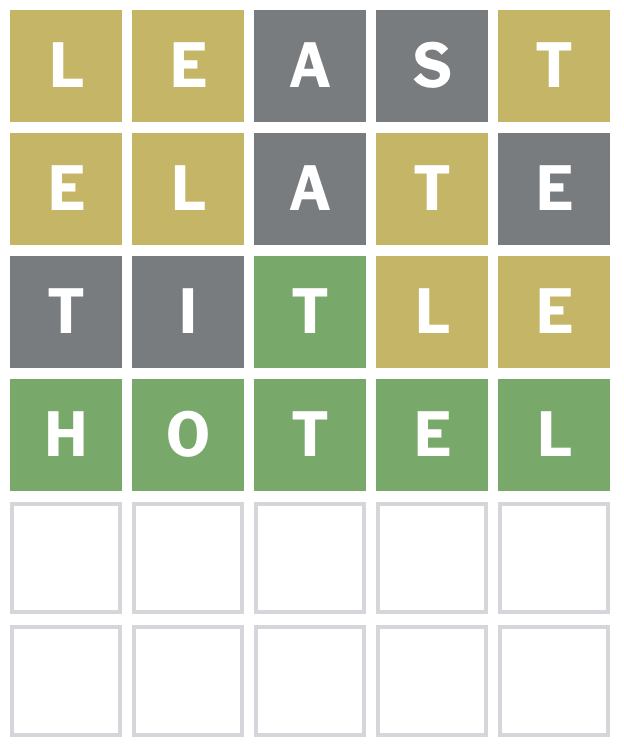

In this part, you’re going to implement a Wordle game. Wordle is a word guessing game. A word is chosen at random from a small list of 5-letter words at the start of the game. The player has 6 chances to guess the word.

Try playing today’s Wordle to see how the rules work!

Guesses that are not valid words are rejected and do not count toward the guess total.

After each (valid) guess, the word appears with each letter colored as follows:

- If a letter is in the correct position, it is colored green.

- If a letter is in the word but in the wrong position, it is colored yellow (but see the example below).